In this lesson we are going to get briefly familiar with the typical block diagram of a cellphone.

A block diagram helps us understand the signal flow through a particular part of a cellphone's circuit.

A cellphone handset is basically composed of two sections: the RF section and the baseband section.

RF

RF refers to radio frequency, the mode of communication for wireless technologies of all kinds, including cordless phones, radar, ham radio, GPS, and radio and television broadcasts. RF technology is so much a part of our lives that we scarcely notice it. From baby monitors to cell phones, Bluetooth® to remote-control toys, RF waves are all around us. RF waves are electromagnetic waves that propagate at the speed of light, about 186,000 miles per second (300,000 km/s). The frequencies of RF waves, however, are lower than those of visible light, which makes RF waves invisible to the human eye.
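Because every electromagnetic wave travels at the same speed, a lower frequency simply means a longer wavelength. The short sketch below works this out with the relation wavelength = speed of light / frequency. The two example frequencies (a 900 MHz carrier and green light at roughly 550 THz) are illustrative assumptions, not values taken from this lesson.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_m(frequency_hz: float) -> float:
    """Return the free-space wavelength in metres for a given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


# Illustrative frequencies (assumed for this example only).
examples = {
    "900 MHz radio carrier": 900e6,
    "green visible light (~550 THz)": 550e12,
}

for label, frequency in examples.items():
    print(f"{label}: {wavelength_m(frequency):.3e} m")
```

The radio carrier comes out at about a third of a metre, while the visible-light wavelength is well under a micrometre.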

Baseband

In signal processing, baseband describes signals and systems whose range of frequencies is measured from zero to a maximum bandwidth or highest signal frequency. It is sometimes used as a noun for a band of frequencies starting at zero.

In telecommunications, it is the frequency range occupied by a message signal prior to modulation.

It can be considered a synonym for low-pass.
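To make "prior to modulation" concrete, here is a minimal sketch that compares where a message signal sits in frequency before and after modulation. It assumes plain amplitude modulation, chosen only because it is the simplest case to show (real phones use more elaborate digital schemes), and all the constants (100 Hz message, 1000 Hz carrier, 4000 Hz sample rate) are made-up values for illustration.

```python
import cmath
import math

SAMPLE_RATE_HZ = 4000
NUM_SAMPLES = 400      # gives a bin spacing of 4000 / 400 = 10 Hz
MESSAGE_HZ = 100       # the baseband (message) tone
CARRIER_HZ = 1000      # the carrier the message is shifted onto
MOD_INDEX = 0.5        # modulation depth for the AM example


def dft_magnitudes(samples):
    """Return DFT magnitudes for the bins below half the sample rate."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)))
        for k in range(n // 2)
    ]


def strongest_frequencies(samples, count):
    """Return the `count` strongest frequencies in Hz, in ascending order."""
    mags = dft_magnitudes(samples)
    top_bins = sorted(range(len(mags)), key=mags.__getitem__, reverse=True)[:count]
    return sorted(b * SAMPLE_RATE_HZ / len(samples) for b in top_bins)


times = [i / SAMPLE_RATE_HZ for i in range(NUM_SAMPLES)]
message = [math.cos(2 * math.pi * MESSAGE_HZ * t) for t in times]
modulated = [
    (1 + MOD_INDEX * m) * math.cos(2 * math.pi * CARRIER_HZ * t)
    for m, t in zip(message, times)
]

print("baseband:", strongest_frequencies(message, 1))
print("modulated:", strongest_frequencies(modulated, 3))
```

The message alone sits near zero frequency (100 Hz), while after modulation the energy appears at the carrier and at one sideband on each side of it.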

Baseband is also sometimes used as a general term for part of the physical components of a wireless communications product. Typically, it includes the control circuitry (microprocessor), the power supply, and amplifiers.

A baseband processor is an IC that is mainly used in a mobile phone to process communication functions.

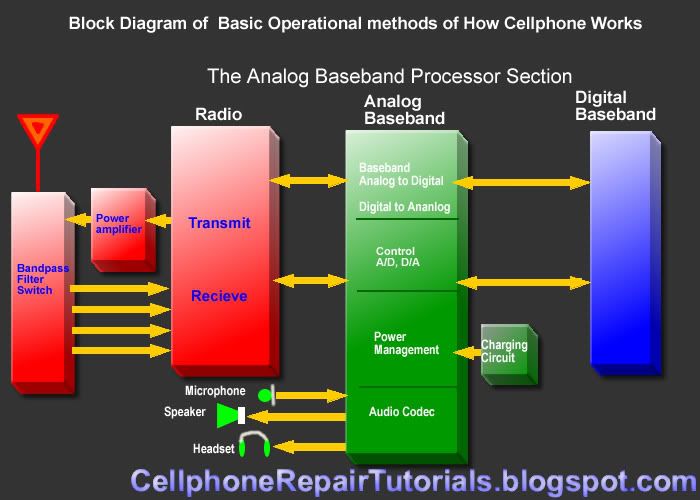

The baseband is itself composed of two sections: the analog and the digital processing sections. We will treat them separately to make each one easier to understand.

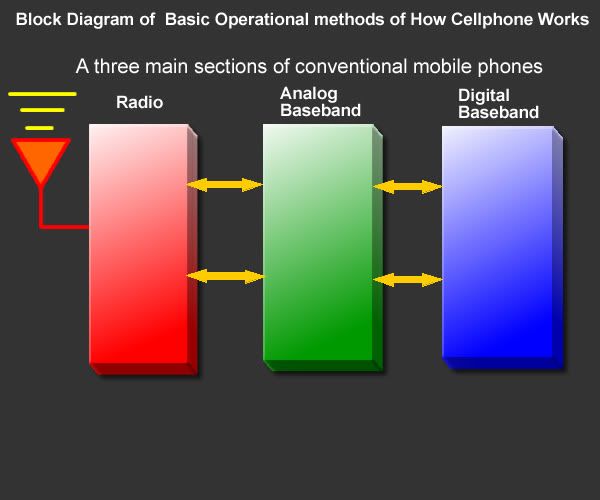

A cellphone has three different sections.

I have kept this explanation simple instead of using deep technical terms.

That way, it is easier to understand the basic concepts of how a cellphone works.

There are three sections because the baseband is divided into two, the analog and the digital functions, while the RF section remains a single circuit section. They are the following:

1. Radio Frequency (RF) Section

2. Analog Baseband Processor

3. Digital Baseband Processor

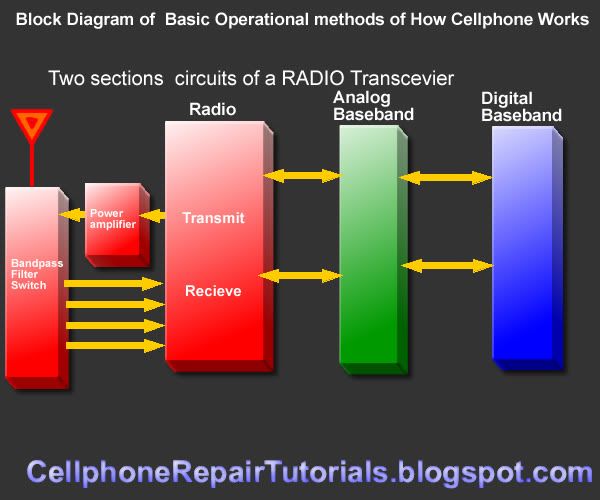

Radio Frequency Processing Section

The RF section is the part of the cellphone circuit also known as the RF transceiver.

It is the section that transmits and receives signals at specific frequencies to communicate with a network and synchronize with other phones.

The RF (radio) section is based on two main circuits:

1. Transmitter

2. Receiver

A simple mobile phone uses these two circuits to communicate with another mobile phone. A transmitter is a circuit or device that sends radio signals into the air, and a receiver, much like an ordinary radio, picks up the transmissions (radiation) that a transmitter spreads through the air on a specific frequency.

Two-way communication is made possible by synchronizing two transmitters and two receivers so that the transmitter in one cell phone is tuned to the receiving frequency of the other cell phone, and the transmitter of the second cell phone is tuned to the receiving frequency of the first. The first cell phone transmits its radiation into the air while the other phone listens to it, and the same process takes place in the opposite direction. This is how the two handheld cell phones communicate with one another.
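The pairing rule described above can be sketched in a few lines. This is only a toy model of the basic theory: the `Handset` class, the function names and the two frequencies (900 MHz and 945 MHz, written in kHz) are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Handset:
    """A toy handset with one transmit and one receive frequency (in kHz)."""
    name: str
    tx_khz: int
    rx_khz: int


def can_hear(listener: Handset, talker: Handset) -> bool:
    """A listener hears a talker only if it receives on the talker's transmit frequency."""
    return listener.rx_khz == talker.tx_khz


def two_way_link(a: Handset, b: Handset) -> bool:
    """Two-way communication needs each phone to hear the other."""
    return can_hear(a, b) and can_hear(b, a)


# Hypothetical frequencies: each phone transmits where the other receives.
phone_a = Handset("A", tx_khz=900_000, rx_khz=945_000)
phone_b = Handset("B", tx_khz=945_000, rx_khz=900_000)

print(two_way_link(phone_a, phone_b))
```

If either phone were tuned to a different receive frequency, `two_way_link` would return `False`, because one direction of the conversation would be lost.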

The technology used these days is a little different, but it is based on the basic theory described above. Today's technology will be discussed later on.

Analog Baseband Processor

A/D and D/A section

The analog baseband processing section is composed of different types of circuits.

This section converts and processes analog signals to digital (A/D) and digital signals to analog (D/A).
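As a rough illustration of what A/D and D/A conversion mean, the sketch below turns a voltage into an integer code and back again. It is an idealized model, not how any particular phone chip works; the 8-bit resolution and the 1.0 V reference voltage are assumptions made for the example.

```python
def adc(voltage: float, v_ref: float = 1.0, bits: int = 8) -> int:
    """Quantize a voltage in the range 0..v_ref to an unsigned integer code."""
    levels = 2 ** bits
    clamped = min(max(voltage, 0.0), v_ref)   # out-of-range inputs are clipped
    return min(int(clamped / v_ref * levels), levels - 1)


def dac(code: int, v_ref: float = 1.0, bits: int = 8) -> float:
    """Convert a code back to the voltage at the middle of its quantization step."""
    levels = 2 ** bits
    return (code + 0.5) * v_ref / levels


sample_v = 0.3                      # an example analog sample, in volts
code = adc(sample_v)                # analog -> digital
restored_v = dac(code)              # digital -> analog
print(code, round(restored_v, 4))
```

The restored voltage is close to the original but not identical; the small difference is the quantization error, which shrinks as the number of bits grows.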

Control section

This section acts as the controller of the input and output of any analog or digital signal.

Power Management

The power management section of a mobile phone is designed to handle the energy consumed by the phone. A single power section has two main subsections:

• Power Distribution and switching section

• Charging Section

The power distribution section is designed to distribute the required voltages and currents to the other sections of the phone. This section takes power from the battery (commonly rated at 3.6 volts) and in some places steps it down to various voltages such as 2.8 V, 1.8 V and 1.6 V, while in other places it steps the voltage up, for example to 4.8 V. This section is commonly designed around a power IC (an integrated circuit) that distributes and regulates the voltage used by the other components.
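The step-down and step-up cases above can be told apart with a simple comparison against the battery voltage. The sketch below uses the voltages quoted in the text; the function name and the "pass-through" label for an equal voltage are assumptions added for the example.

```python
BATTERY_V = 3.6  # nominal battery voltage quoted in the text


def converter_type(rail_v: float, source_v: float = BATTERY_V) -> str:
    """Say whether a supply rail needs stepping down or up from the source."""
    if rail_v < source_v:
        return "step-down"
    if rail_v > source_v:
        return "step-up"
    return "pass-through"   # assumed label when no conversion is needed


# Supply rails mentioned in the text, in volts.
RAILS_V = [2.8, 1.8, 1.6, 4.8]

for rail in RAILS_V:
    print(f"{rail:.1f} V rail: {converter_type(rail)}")
```

Only the 4.8 V rail is above the battery voltage, so it is the only one that needs a step-up stage.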

The charging section is based on a charging IC, which takes power from an external source and delivers it to the battery to recharge it when it is exhausted. This section conventionally takes 6.4 V from an external battery charger and regulates it to 5.8 V while supplying the battery. The battery is charged by this process and is then ready for the next session (a battery session is the standby or talk time that the manufacturer of the cell phone specifies).

Audio Codecs Section

This is the section where analog and digital audio signals are processed, such as those of the microphone, earpiece speaker, headset and ringtones, as well as the vibrator circuits.

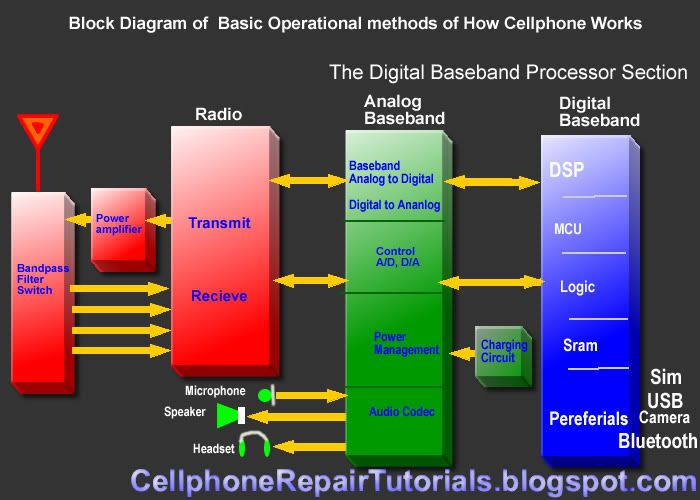

Digital Baseband Processor

This is the part where all applications are processed. The digital baseband processor section is used in mobile phones to handle data input and output signals, such as switching, driving application commands, and accessing and executing memory.

The following parts and sections are installed in a digital baseband circuit.

CPU

The Central Processing Unit (CPU) is responsible for interpreting and executing most of the commands from the user interface. It is also called the microprocessor or central processor, and is often described as "the brains of the computer".

Flash and Memory Storage Circuits

*RAM (Random Access Memory)

*ROM, Flash (Read-Only Memory)

Interfaces such as the following are also part of this section:

*Bluetooth

*Wi-Fi

*Camera

*Screen Display

*Keypads

*USB

*SIM-Card
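To tie the three sections together, here is a small sketch that records the blocks named in this lesson as a lookup table. The grouping follows the text; the variable and function names are hypothetical and exist only for this example.

```python
# The three sections of a cellphone and the blocks this lesson places in each.
PHONE_SECTIONS = {
    "RF section": ["Transmitter", "Receiver"],
    "Analog baseband processor": [
        "A/D and D/A conversion",
        "Control",
        "Power management",
        "Audio codecs",
    ],
    "Digital baseband processor": [
        "CPU", "RAM", "ROM/Flash", "Bluetooth", "Wi-Fi",
        "Camera", "Screen display", "Keypad", "USB", "SIM card",
    ],
}


def find_section(block: str) -> str:
    """Return the name of the section that contains the given block."""
    for section, blocks in PHONE_SECTIONS.items():
        if block in blocks:
            return section
    raise KeyError(f"unknown block: {block}")


print(find_section("Audio codecs"))
print(find_section("SIM card"))
```

A table like this is a handy starting point when tracing a fault: once you know which block misbehaves, you know which section of the circuit to inspect first.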

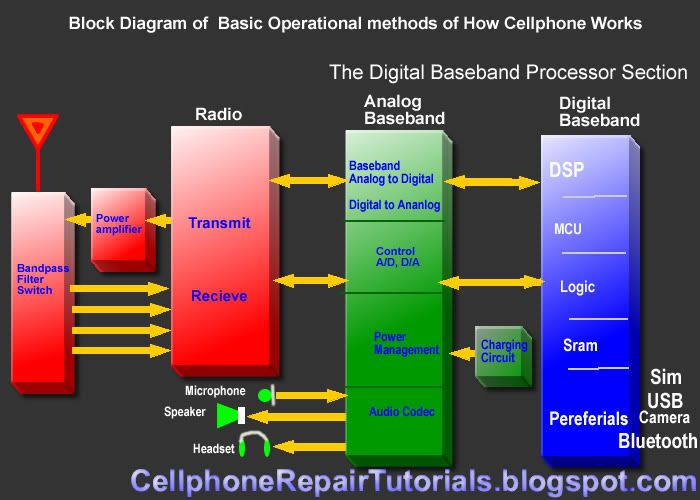

Here is a typical overview of the block diagram of recent mobile phone designs.

Different mobile phones differ in concept and design in many respects, but the methods and operational flow are essentially the same. They differ in which IC chips and parts are used and installed in a particular phone's circuitry.

*1 AAC-101 is a unit to convert MPEG1, the audio data used with video editor software, into AAC, the one for digital terrestrial broadcasting.
